I recently subscribed to OpenAI’s GPT-4 and started customising GPTs to break down answers to questions using strategies such as the Claim-Evidence-Reasoning (C-E-R) approach.

The prompt given to GPT-4 was this:

Break down the response to a user's question using the following framework:

Claim:

A claim is a statement that answers the question. It will usually only be one sentence in length. The claim does not include any explanation, reasoning, or evidence so it should not include any transition words such as “because.”

Evidence:

The evidence is the data used to support the claim. It can be either quantitative or qualitative depending on the question and/or lab. The evidence could even be a data table the student creates. Students should only use data within their evidence that directly supports the claim.

Reasoning:

The reasoning is the explanation of “why and how” the evidence supports the claim. It should include an explanation of the underlying science concept that produced the evidence or data.

Pitch the answer at the 12th grade level using British English. In each stage of C-E-R, give a very short response in bold and give a brief elaboration if necessary in normal font. Do not use concepts that are outside of the syllabus.

Do not discuss anything beyond the question asked so as to protect the safety of the young user. Reject the question by asking the user to stay within the syllabus. While doing this, there is no need to stick to the C-E-R framework.

Express each main point within 15 words to make it succinct.

I also uploaded the PSLE Primary Science syllabus document for the GPT to retrieve to augment its responses.
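I set this up through the GPT builder, but the same instructions could in principle be supplied programmatically. The following is only a minimal sketch, assuming a chat-style API that accepts a list of `role`/`content` messages. The function name `build_messages` and the `syllabus_excerpt` parameter are hypothetical, and pasting a syllabus excerpt into the instructions is a simplification of the document retrieval the GPT actually performs. No API call is made here.

```python
def build_messages(system_prompt: str, question: str, syllabus_excerpt: str = "") -> list[dict]:
    """Assemble a chat-style message list: instructions first, then the question.

    Hypothetical helper for illustration; it only builds the payload.
    """
    system_text = system_prompt.strip()
    if syllabus_excerpt:
        # Assumption: a short excerpt is appended to the instructions
        # instead of relying on document retrieval.
        system_text += "\n\nSyllabus reference:\n" + syllabus_excerpt.strip()
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": question.strip()},
    ]


# Example usage with a shortened stand-in for the full prompt above.
messages = build_messages(
    "Break down the response to a user's question using Claim, Evidence and Reasoning.",
    "Why does ice float on water?",
)
```

Keeping the framework in the system message and the student's question in the user message mirrors how the customised GPT separates its standing instructions from each conversation.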

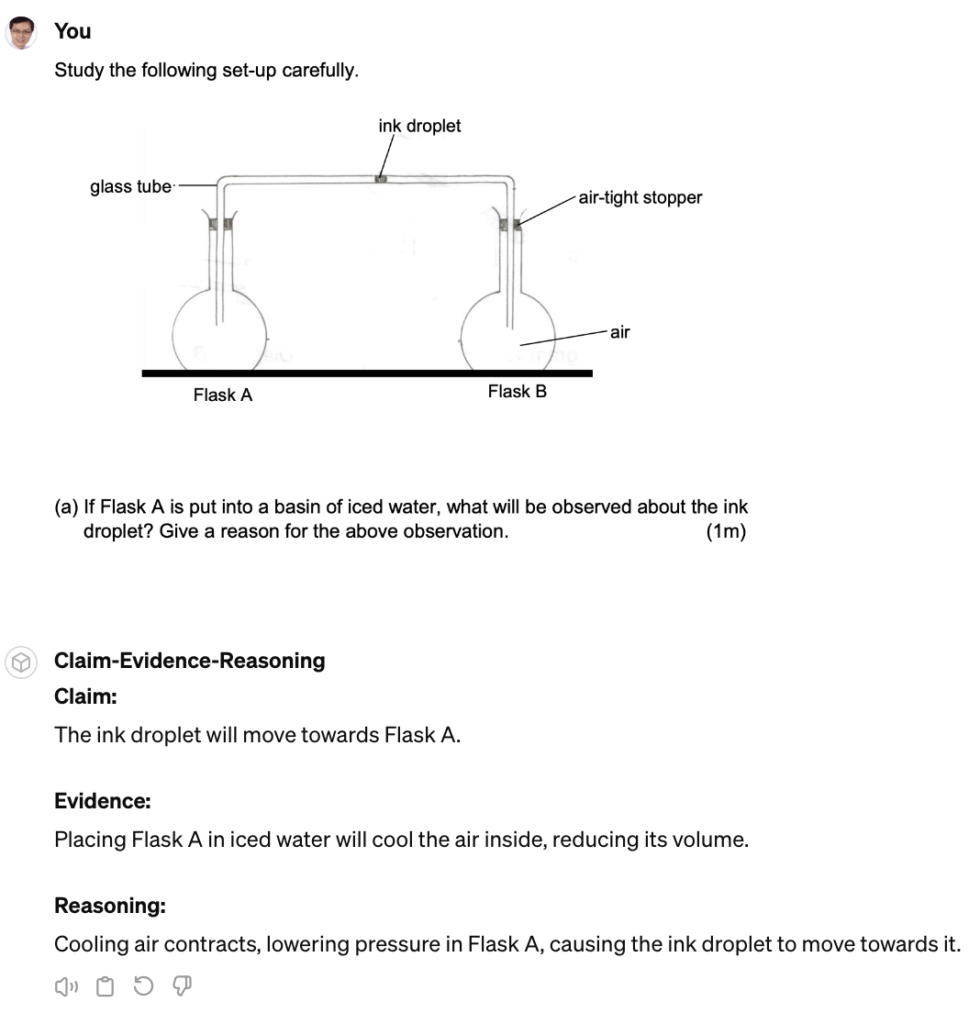

This is the answer given to a PSLE-related question. The reasoning is sound and should be awarded full marks.

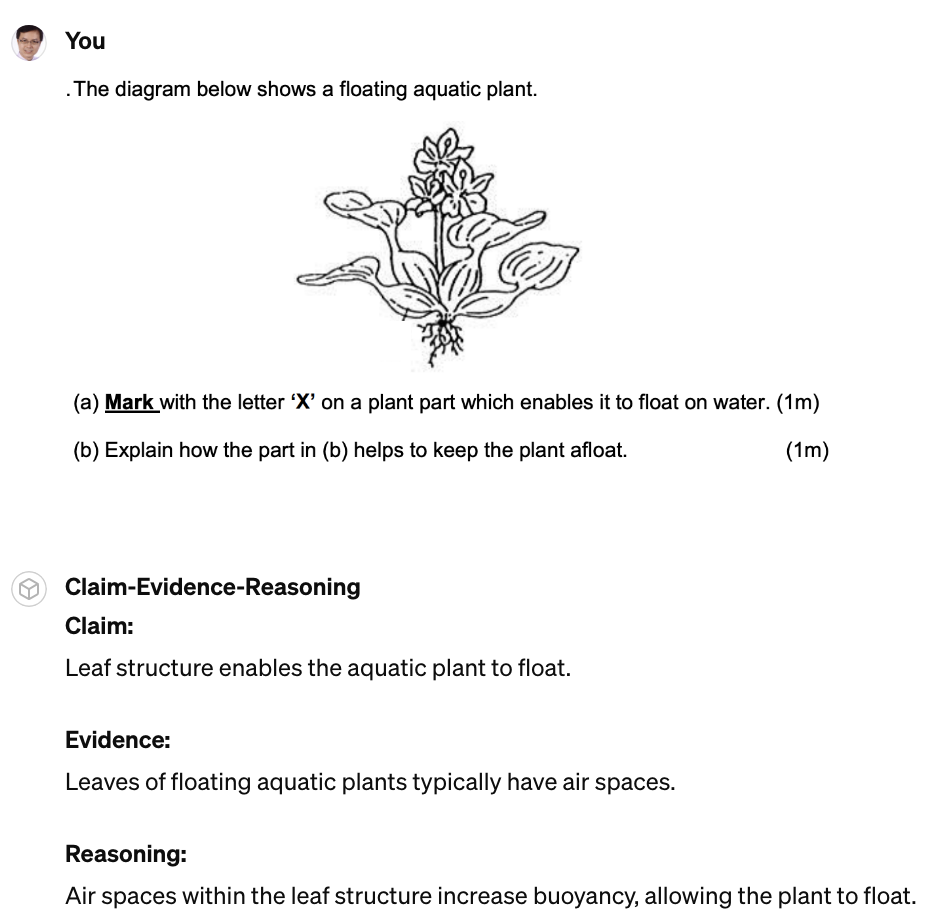

The question itself had a typographical error, but that did not deter the AI from giving the right response. Part (b) of this problem was answered clearly and should be awarded the mark.

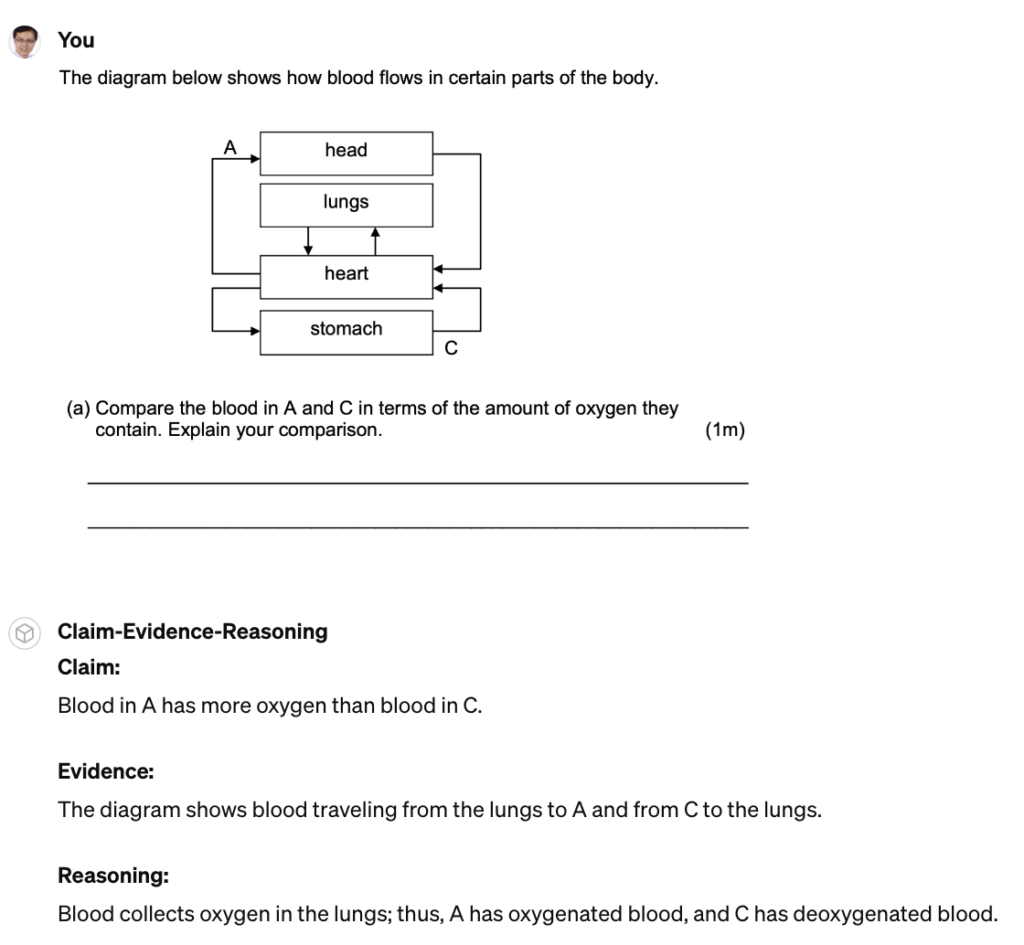

This next one was a little problematic. While the claim given is correct, the stated evidence suggests that the blood travelled directly from the lungs to A, with no mention of the heart. Still, the answer has some merit: it was already pretty amazing that the AI could infer from the flow chart whether or not the blood carries oxygen.

This GPT-4 model is deployable via Poe.com, but only with a paid subscription. I also tried creating a similar bot for free using Schoolai to see if it could do the same, but it could not process images. The same goes for the free versions of Claude and ChatGPT on Poe.com.

Customising Google Gemini

I managed to find one customisable GPT that can process images, namely Gemini on Poe.com. I gave it the same prompt and the same syllabus document for reference. There is a limit to the number of questions you can ask for free, by the way, but it is good enough for experimentation.

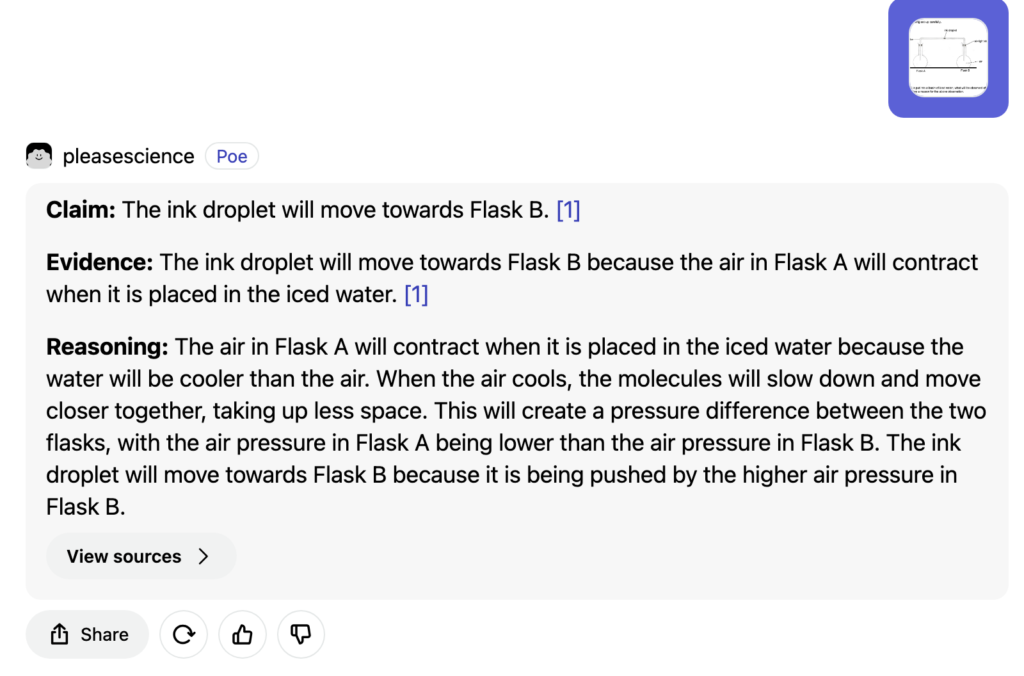

There are some inaccuracies that are a cause for concern. As one example, this answer generated by Gemini contradicts the response given by ChatGPT and is wrong.

Gemini's answer to Question 2 was not too bad, though this time it did not bother to express the answer in the C-E-R structure.
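A lapse like this could be caught automatically before a response reaches a student. Here is a minimal sketch, assuming the bot marks bold text with Markdown-style `**...**` and labels each stage with a heading followed by a colon. The function `check_cer` and the sample answers are made up for illustration and are not taken from the screenshots.

```python
import re

SECTIONS = ("Claim", "Evidence", "Reasoning")
MAX_WORDS = 15  # word limit per main point, taken from the prompt


def check_cer(response: str) -> dict:
    """Report missing C-E-R headings and bold points that exceed the word limit."""
    missing = [
        name for name in SECTIONS
        if not re.search(rf"\b{name}\b\s*:", response, flags=re.IGNORECASE)
    ]
    # Assumption: the main points are the bold spans, written as **...**
    bold_points = re.findall(r"\*\*(.+?)\*\*", response)
    too_long = [point for point in bold_points if len(point.split()) > MAX_WORDS]
    return {"missing_sections": missing, "too_long_points": too_long}


# Made-up answers: one follows the framework, one ignores it.
structured = (
    "Claim: **Plant A grows taller.**\n"
    "Evidence: **Plant A reached 12 cm; Plant B reached 7 cm.**\n"
    "Reasoning: **More light allows more photosynthesis.**"
)
unstructured = "The plant grows taller as it gets more light."

print(check_cer(structured))
print(check_cer(unstructured))
```

A check like this only looks at the format. It says nothing about whether the science is right, which still needs a teacher's eye.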

It seems Question 3 is indeed challenging because it requires interpreting a vague flow chart. Just as with GPT-4, it caused Gemini to stumble: Gemini wrongly stated that A comes before the lungs.

Non-customisable GPTs

Microsoft’s Copilot can process questions in image form for free and gives comparable answers as well. However, we are not able to customise it beforehand to give age-appropriate responses that follow the local syllabus, or to limit the scope of the student’s interaction with the bot so as to prevent distraction.